We evaluate our model with a 323 voxel grid on sparse, unstructured light fields.

Datasets. We use all 8 scenes from the NeRF Real Forward-Facing dataset.

Baselines. We observe better reproduction of view dependence, sharpness, and overall improved perceptual fidelity of our method over NeRF, while rendering much faster. We can sometimes struggle when there exist thin features with large disparity and complex occlusions (see the Fern sequence).

We evaluate our model without subdivision on dense 5x5 light fields.

Datasets. We use all 12 scenes from the Stanford Light Fields dataset.

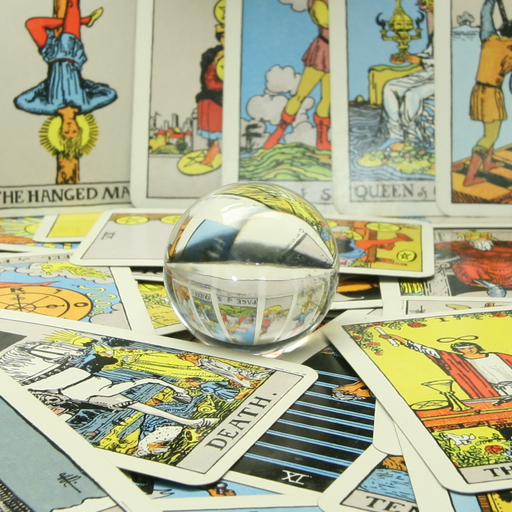

Baselines. NeRF interpolates in a geometrically consistent manner, but fails to capture high frequency view dependence such as reflections and refractions. X-Fields also struggles with high frequency view dependence in some cases, and can also exhibit minor ghosting artifacts. We render much faster than NeRF, and about half as fast as X-Fields. Both baselines, in general, have worse sharpness than our method—however, our embedding network struggles to identify correspondences and interpolate on larger baseline sequences such as Tarot (Large) and Knights.

Sparse

Dense

We evaluate alternative embedding approaches, including our model without an embedding network and our model with feature space embedding. All experiments on sparse data are for our subdivided model with a 323 voxel grid, while no subdivision is used for dense data.

Datasets. We use 8 scenes from the NeRF Real Forward-Facing dataset and 12 scenes from the Stanford Light Fields dataset.

Baselines. Local affine-transformation embedding leads to the best overall qualititative and quantitative performance. For some large baseline scenes, such as Tarot (Large), feature embedding can lead to better interpolation of refractive effects, since it does not implicitly enforce local planarity of color level sets within the light field.

We evaluate our subdivided model with different resolution voxel grids, from 43 to 323.

Datasets. We use 8 scenes from the NeRF Real Forward-Facing dataset.

Baselines. Even with low resolution voxel grids, our model still produces accurate image predictions, albeit with slightly reduced geometric consistency.

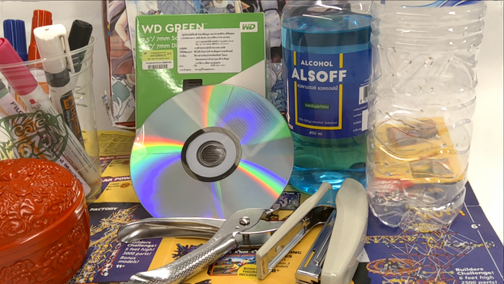

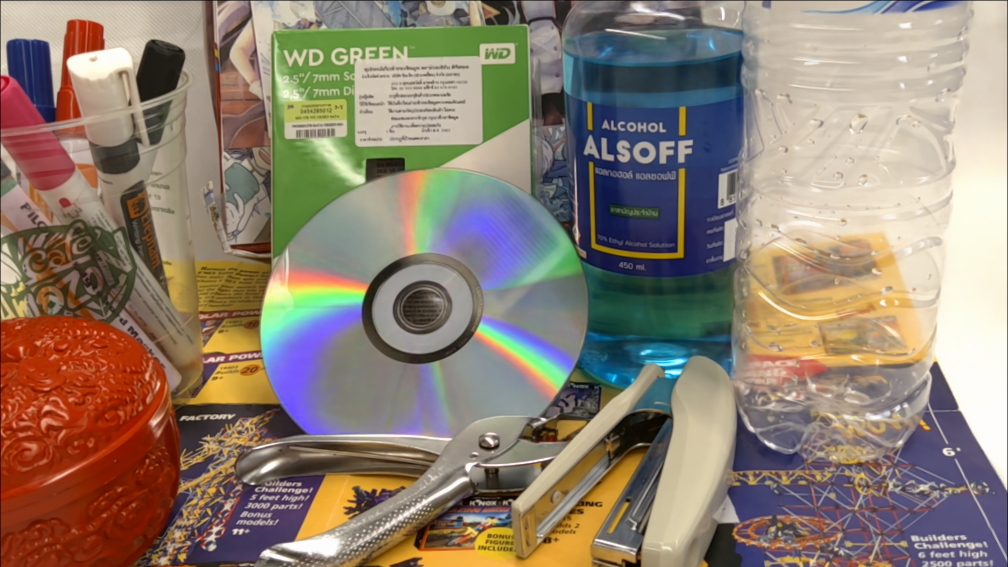

We show qualitative results on unstructured, dense sequences within the Shiny dataset.

Datasets. We use 2 scenes from the Shiny Dataset—CD and Lab.

Baselines. We observe better reproduction of view dependence for heldout views, specifically of reflections and refractions. Observe the refractions in the liquids in both sequences, and the reflections on the CD and textbook.

We show spiral trajectories for a select few scenes.

Datasets. We use 3 scenes from the NeRF Real Forward-Facing dataset—Fern, Horns, and Leaves.

Baselines. For the Fern scene, our model exhbits some artifacts around the fern leaves, which improve with student teacher training. For the Horns scene, we better reproduce the many glass reflections. For the Leaves scene, although we have worse quantitative performance on PSNR and SSIM (and some floating artifacts near the boundaries of the images), our models with and without student teacher training have better sharpness than NeRF.

We show spiral or linear trajectories for a select few scenes.

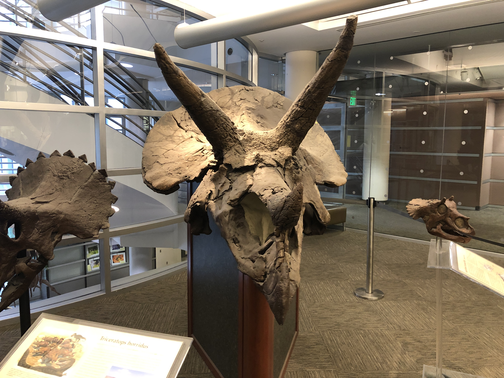

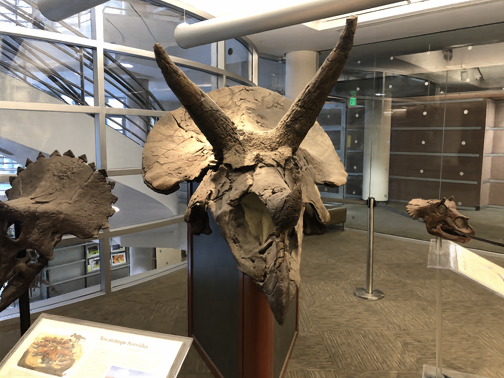

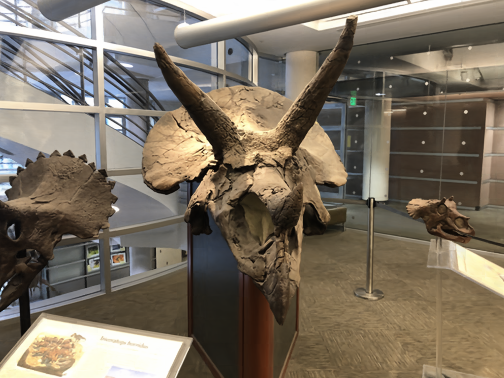

Datasets. We use 3 scenes from the Stanford Light Fields dataset—Treasure, Bulldozer, and Tarot (Small).

Baselines. As noted previously, we show better sharpness and reproduction of view dependence than both baselines. Specifically, note the reflections on gems in Treasure, the reflections on the bulldozer arm in Bulldozer, and the refractions within the glass ball in Tarot (Small).

We compare our embedding approaches in video form for a single dense scene.

Datasets. We use the Bulldozer scene from the Stanford Light Fields dataset.

Baselines. Our method without embedding leads to predictions that are severely distorted, and that do not maintain multi-view consistency. Our feature embedding approach exhibits low frequency "wobbling" artifacts that are obvious when the video is viewed in full screen. Our local affine-transformation embedding approach leads to the best overall quantitative performance, and multi-view consistency.